Installing Oracle 19c RAC on Oracle Linux 8.2 - Part 2 (Grid Infrastructure)

1. Create the installation directories

mprac1.localdomain@root:/root> mkdir -p /u01/app/19.0.0/grid
mprac1.localdomain@root:/root> mkdir -p /u01/app/grid
mprac1.localdomain@root:/root> mkdir -p /u01/app/oracle/product/19.0.0/db_1
mprac1.localdomain@root:/root> chown -R grid:oinstall /u01
mprac1.localdomain@root:/root> chown -R oracle:oinstall /u01/app/oracle
mprac1.localdomain@root:/root> chmod -R 775 /u01/
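Before moving on, it can help to confirm that the ownership and permissions came out as intended. The following is a minimal sketch; `check_dirs` is a hypothetical helper name, not part of any Oracle tooling, and it assumes GNU `stat` as shipped with Oracle Linux.

```shell
#!/usr/bin/env bash
# check_dirs DIR...
# Hypothetical helper: for each directory, print "owner:group mode path",
# or "MISSING path" if the directory does not exist.
check_dirs() {
    local d
    for d in "$@"; do
        if [ -d "$d" ]; then
            # %U = owner name, %G = group name, %a = octal mode, %n = path
            stat -c '%U:%G %a %n' "$d"
        else
            echo "MISSING $d"
        fi
    done
}
```

Running `check_dirs /u01/app/19.0.0/grid /u01/app/grid /u01/app/oracle/product/19.0.0/db_1` after the commands above should report `grid:oinstall` for the first two paths and `oracle:oinstall` for the last, all with mode 775.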

2. Unzip the Grid Infrastructure installation file

mprac1.localdomain@root:/root> mv LINUX.X64_193000_grid_home.zip /u01/app/19.0.0/grid/
mprac1.localdomain@root:/root> chown grid:oinstall /u01/app/19.0.0/grid/LINUX.X64_193000_grid_home.zip
mprac1.localdomain@root:/root> su - grid
mprac1.localdomain@grid:+ASM1:/home/grid> cd /u01/app/19.0.0/grid/
mprac1.localdomain@grid:+ASM1:/u01/app/19.0.0/grid> unzip LINUX.X64_193000_grid_home.zip

3. Install the cvuqdisk rpm as root

mprac1.localdomain@grid:+ASM1:/u01/app/19.0.0/grid> exit
logout
mprac1.localdomain@root:/root> cd /u01/app/19.0.0/grid/cv/rpm
mprac1.localdomain@root:/u01/app/19.0.0/grid/cv/rpm> cp -p cvuqdisk-1.0.10-1.rpm /tmp
mprac1.localdomain@root:/u01/app/19.0.0/grid/cv/rpm> scp -p cvuqdisk-1.0.10-1.rpm mprac2:/tmp
cvuqdisk-1.0.10-1.rpm                 100%   11KB   9.5MB/s   00:00
mprac1.localdomain@root:/u01/app/19.0.0/grid/cv/rpm> rpm -Uvh /tmp/cvuqdisk-1.0.10-1.rpm
Verifying...                          ################################# [100%]
Preparing...                          ################################# [100%]
Using default group oinstall to install package
Updating / installing...
   1:cvuqdisk-1.0.10-1                ################################# [100%]
mprac1.localdomain@root:/u01/app/19.0.0/grid/cv/rpm>

Install the package on node 2 as well.

mprac2.localdomain@root:/root> rpm -Uvh /tmp/cvuqdisk-1.0.10-1.rpm
Verifying...                          ################################# [100%]
Preparing...                          ################################# [100%]
Using default group oinstall to install package
Updating / installing...
   1:cvuqdisk-1.0.10-1                ################################# [100%]
mprac2.localdomain@root:/root>

4. Run runcluvfy

1) While running, runcluvfy has to copy files such as resolv.conf from node 2 to node 1 with scp, but an issue with OpenSSH 8.x causes this copy to fail, so apply a workaround first. Perform the following steps as root.

mprac1.localdomain@root:/root> cp -p /usr/bin/scp /usr/bin/scp-original
mprac1.localdomain@root:/root> echo "/usr/bin/scp-original -T \$*" > /usr/bin/scp
mprac1.localdomain@root:/root> cat /usr/bin/scp
/usr/bin/scp-original -T $*
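The wrapper above has no shebang line and passes its arguments through an unquoted `$*`, which splits any argument containing spaces. A slightly more defensive variant might look like the sketch below. `write_scp_wrapper` is a hypothetical helper name; the only scp option used is `-T`, which turns off the strict filename checking that OpenSSH 8.x enables by default.

```shell
#!/usr/bin/env bash
# write_scp_wrapper WRAPPER ORIGINAL
# Hypothetical helper: write a wrapper script at WRAPPER that runs
# ORIGINAL with -T and forwards every argument unchanged ("$@").
write_scp_wrapper() {
    local wrapper=$1 original=$2
    # Single quotes keep "$@" literal so it is expanded by the wrapper,
    # not by this function.
    printf '#!/bin/bash\nexec %s -T "$@"\n' "$original" > "$wrapper"
    chmod 755 "$wrapper"
}
```

As root, after the `cp -p /usr/bin/scp /usr/bin/scp-original` step, this would be called as `write_scp_wrapper /usr/bin/scp /usr/bin/scp-original`. Once the installation is finished, the original binary can be put back with `cp -p /usr/bin/scp-original /usr/bin/scp`.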

2) Run runcluvfy

mprac1.localdomain@root:/root> su - grid
mprac1.localdomain@grid:+ASM1:/home/grid> oh
mprac1.localdomain@grid:+ASM1:/u01/app/19.0.0/grid> ./runcluvfy.sh stage -pre crsinst -n mprac1,mprac2 -method root -verbose
Enter "ROOT" password:
ERROR: PRVG-10467 : The default Oracle Inventory group could not be determined.
Verifying Physical Memory ...
  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        9.4488GB (9907820.0KB)    8GB (8388608.0KB)         passed
  mprac1        9.4488GB (9907820.0KB)    8GB (8388608.0KB)         passed
Verifying Physical Memory ...PASSED
Verifying Available Physical Memory ...
  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        8.1019GB (8495408.0KB)    50MB (51200.0KB)          passed
  mprac1        7.8059GB (8185072.0KB)    50MB (51200.0KB)          passed
Verifying Available Physical Memory ...PASSED
Verifying Swap Size ...
  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        20GB (2.0971516E7KB)      9.4488GB (9907820.0KB)    passed
  mprac1        20GB (2.0971516E7KB)      9.4488GB (9907820.0KB)    passed
Verifying Swap Size ...PASSED
Verifying Free Space: mprac2:/usr,mprac2:/var,mprac2:/etc,mprac2:/sbin,mprac2:/tmp ...
  Path              Node Name     Mount point   Available     Required      Status
  ----------------  ------------  ------------  ------------  ------------  ------------
  /usr              mprac2        /             64.8184GB     25MB          passed
  /var              mprac2        /             64.8184GB     5MB           passed
  /etc              mprac2        /             64.8184GB     25MB          passed
  /sbin             mprac2        /             64.8184GB     10MB          passed
  /tmp              mprac2        /             64.8184GB     1GB           passed
Verifying Free Space: mprac2:/usr,mprac2:/var,mprac2:/etc,mprac2:/sbin,mprac2:/tmp ...PASSED
Verifying Free Space: mprac1:/usr,mprac1:/var,mprac1:/etc,mprac1:/sbin,mprac1:/tmp ...
  Path              Node Name     Mount point   Available     Required      Status
  ----------------  ------------  ------------  ------------  ------------  ------------
  /usr              mprac1        /             51.8668GB     25MB          passed
  /var              mprac1        /             51.8668GB     5MB           passed
  /etc              mprac1        /             51.8668GB     25MB          passed
  /sbin             mprac1        /             51.8668GB     10MB          passed
  /tmp              mprac1        /             51.8668GB     1GB           passed
Verifying Free Space: mprac1:/usr,mprac1:/var,mprac1:/etc,mprac1:/sbin,mprac1:/tmp ...PASSED
Verifying User Existence: grid ...
  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  mprac2        passed                    exists(1001)
  mprac1        passed                    exists(1001)
  Verifying Users With Same UID: 1001 ...PASSED
Verifying User Existence: grid ...PASSED
Verifying Group Existence: asmadmin ...
  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  mprac2        passed                    exists
  mprac1        passed                    exists
Verifying Group Existence: asmadmin ...PASSED
Verifying Group Existence: asmdba ...
  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  mprac2        passed                    exists
  mprac1        passed                    exists
Verifying Group Existence: asmdba ...PASSED
Verifying Group Membership: asmdba ...
  Node Name         User Exists   Group Exists  User in Group  Status
  ----------------  ------------  ------------  ------------  ----------------
  mprac2            yes           yes           yes           passed
  mprac1            yes           yes           yes           passed
Verifying Group Membership: asmdba ...PASSED
Verifying Group Membership: asmadmin ...
  Node Name         User Exists   Group Exists  User in Group  Status
  ----------------  ------------  ------------  ------------  ----------------
  mprac2            yes           yes           yes           passed
  mprac1            yes           yes           yes           passed
Verifying Group Membership: asmadmin ...PASSED
Verifying Run Level ...
  Node Name     run level                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        5                         3,5                       passed
  mprac1        5                         3,5                       passed
Verifying Run Level ...PASSED
Verifying Users With Same UID: 0 ...PASSED
Verifying Current Group ID ...PASSED
Verifying Root user consistency ...
  Node Name                             Status
  ------------------------------------  ------------------------
  mprac2                                passed
  mprac1                                passed
Verifying Root user consistency ...PASSED
Verifying Package: cvuqdisk-1.0.10-1 ...
  Node Name     Available                 Required                  Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        cvuqdisk-1.0.10-1         cvuqdisk-1.0.10-1         passed
  mprac1        cvuqdisk-1.0.10-1         cvuqdisk-1.0.10-1         passed
Verifying Package: cvuqdisk-1.0.10-1 ...PASSED
Verifying Host name ...PASSED
Verifying Node Connectivity ...
  Verifying Hosts File ...
  Node Name                             Status
  ------------------------------------  ------------------------
  mprac1                                passed
  mprac2                                passed
  Verifying Hosts File ...PASSED
Interface information for node "mprac2"
 Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
 ------ --------------- --------------- --------------- --------------- ----------------- ------
 ens192 192.168.45.202  192.168.0.0     0.0.0.0         192.168.45.105  00:50:56:96:29:06 1500
 ens224 1.1.1.202       1.1.1.0         0.0.0.0         192.168.45.105  00:50:56:96:57:17 9000
Interface information for node "mprac1"
 Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
 ------ --------------- --------------- --------------- --------------- ----------------- ------
 ens192 192.168.45.201  192.168.0.0     0.0.0.0         192.168.45.105  00:50:56:96:51:B1 1500
 ens224 1.1.1.201       1.1.1.0         0.0.0.0         192.168.45.105  00:50:56:96:05:17 9000
Check: MTU consistency of the subnet "1.1.1.0".
  Node              Name          IP Address    Subnet        MTU
  ----------------  ------------  ------------  ------------  ----------------
  mprac2            ens224        1.1.1.202     1.1.1.0       9000
  mprac1            ens224        1.1.1.201     1.1.1.0       9000
Check: MTU consistency of the subnet "192.168.0.0".
  Node              Name          IP Address      Subnet        MTU
  ----------------  ------------  ------------  ------------  ----------------
  mprac2            ens192        192.168.45.202  192.168.0.0   1500
  mprac1            ens192        192.168.45.201  192.168.0.0   1500
  Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  mprac1[ens224:1.1.1.201]        mprac2[ens224:1.1.1.202]        yes
  Source                          Destination                     Connected?
  ------------------------------  ------------------------------  ----------------
  mprac1[ens192:192.168.45.201]   mprac2[ens192:192.168.45.202]   yes
  Verifying subnet mask consistency for subnet "1.1.1.0" ...PASSED
  Verifying subnet mask consistency for subnet "192.168.0.0" ...PASSED
Verifying Node Connectivity ...PASSED
Verifying Multicast or broadcast check ...
Checking subnet "1.1.1.0" for multicast communication with multicast group "224.0.0.251"
Verifying Multicast or broadcast check ...PASSED
Verifying Network Time Protocol (NTP) ...PASSED
Verifying Same core file name pattern ...PASSED
Verifying User Mask ...
  Node Name     Available                 Required                  Comment
  ------------  ------------------------  ------------------------  ----------
  mprac2        0022                      0022                      passed
  mprac1        0022                      0022                      passed
Verifying User Mask ...PASSED
Verifying User Not In Group "root": grid ...
  Node Name     Status                    Comment
  ------------  ------------------------  ------------------------
  mprac2        passed                    does not exist
  mprac1        passed                    does not exist
Verifying User Not In Group "root": grid ...PASSED
Verifying Time zone consistency ...PASSED
Verifying Time offset between nodes ...PASSED
Verifying resolv.conf Integrity ...
  Node Name                             Status
  ------------------------------------  ------------------------
  mprac1                                passed
  mprac2                                passed
checking response for name "mprac2" from each of the name servers specified in "/etc/resolv.conf"
  Node Name     Source                    Comment                   Status
  ------------  ------------------------  ------------------------  ----------
  mprac2        192.168.45.105            IPv4                      passed
checking response for name "mprac1" from each of the name servers specified in "/etc/resolv.conf"
  Node Name     Source                    Comment                   Status
  ------------  ------------------------  ------------------------  ----------
  mprac1        192.168.45.105            IPv4                      passed
Verifying resolv.conf Integrity ...PASSED
Verifying DNS/NIS name service ...PASSED
Verifying Domain Sockets ...PASSED
Verifying /boot mount ...PASSED
Verifying Daemon "avahi-daemon" not configured and running ...
  Node Name     Configured                Status
  ------------  ------------------------  ------------------------
  mprac2        no                        passed
  mprac1        no                        passed
  Node Name     Running?                  Status
  ------------  ------------------------  ------------------------
  mprac2        no                        passed
  mprac1        no                        passed
Verifying Daemon "avahi-daemon" not configured and running ...PASSED
Verifying Daemon "proxyt" not configured and running ...
  Node Name     Configured                Status
  ------------  ------------------------  ------------------------
  mprac2        no                        passed
  mprac1        no                        passed
  Node Name     Running?                  Status
  ------------  ------------------------  ------------------------
  mprac2        no                        passed
  mprac1        no                        passed
Verifying Daemon "proxyt" not configured and running ...PASSED
Verifying User Equivalence ...PASSED
Verifying RPM Package Manager database ...PASSED
Verifying /dev/shm mounted as temporary file system ...PASSED
Verifying File system mount options for path /var ...PASSED
Verifying DefaultTasksMax parameter ...PASSED
Verifying zeroconf check ...PASSED
Verifying ASM Filter Driver configuration ...PASSED
Pre-check for cluster services setup was successful.
CVU operation performed:      stage -pre crsinst
Date:                         Nov 19, 2020 4:56:00 PM
CVU home:                     /u01/app/19.0.0/grid/
User:                         grid
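With `-verbose` the report is long, so it can be easier to save it to a file and look only at what did not pass. The sketch below is one way to do that; `summarize_cvu` is a hypothetical helper, and the `...FAILED` and `...WARNING` line endings it looks for are an assumption about how CVU marks failed checks, since the run above only shows `...PASSED`.

```shell
#!/usr/bin/env bash
# summarize_cvu < cluvfy_output
# Hypothetical helper: count checks ending in "...PASSED", print any check
# ending in "...FAILED" or "...WARNING" (assumed format), and print any
# line that starts with ERROR:/WARNING: or carries a PRVF-/PRVG- code.
summarize_cvu() {
    awk '
        /\.\.\.PASSED[ \t]*$/            { passed++; next }
        /\.\.\.(FAILED|WARNING)/         { problems++; print "CHECK: " $0; next }
        /^(ERROR|WARNING):/ || /PRV[FG]-[0-9]+/ { print "MSG: " $0 }
        END { printf "passed=%d problems=%d\n", passed, problems }
    '
}
```

For example, `./runcluvfy.sh stage -pre crsinst -n mprac1,mprac2 -verbose > /tmp/cvu.log` followed by `summarize_cvu < /tmp/cvu.log` (the log path is only an example) would surface the PRVG-10467 message from the run above without scrolling through every table.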

5. Run the GI (Grid Infrastructure) installation

mprac1.localdomain@grid:+ASM1:/u01/app/19.0.0/grid> ./gridSetup.sh

Installer launch screen

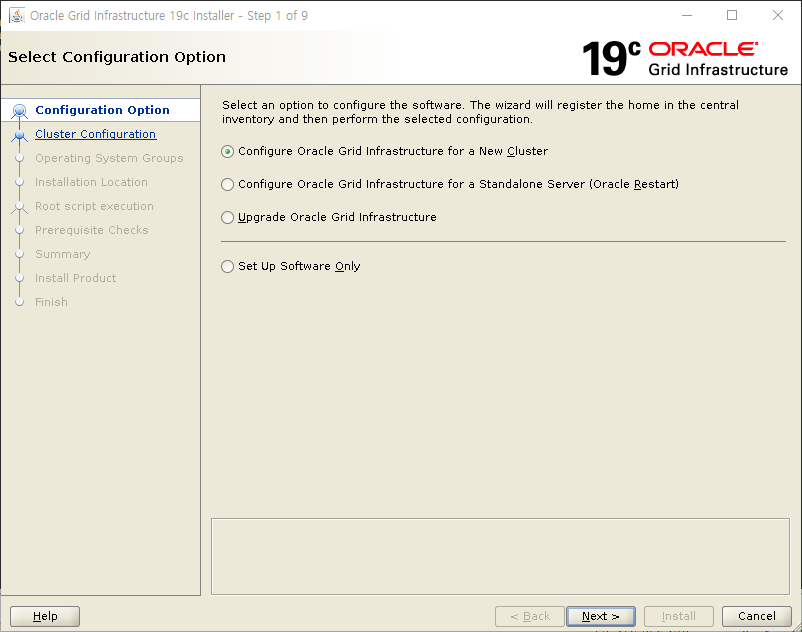

1) Step 1 of 19

Proceed with the Oracle Grid Infrastructure installation.

Select the option to configure a new Grid Infrastructure.

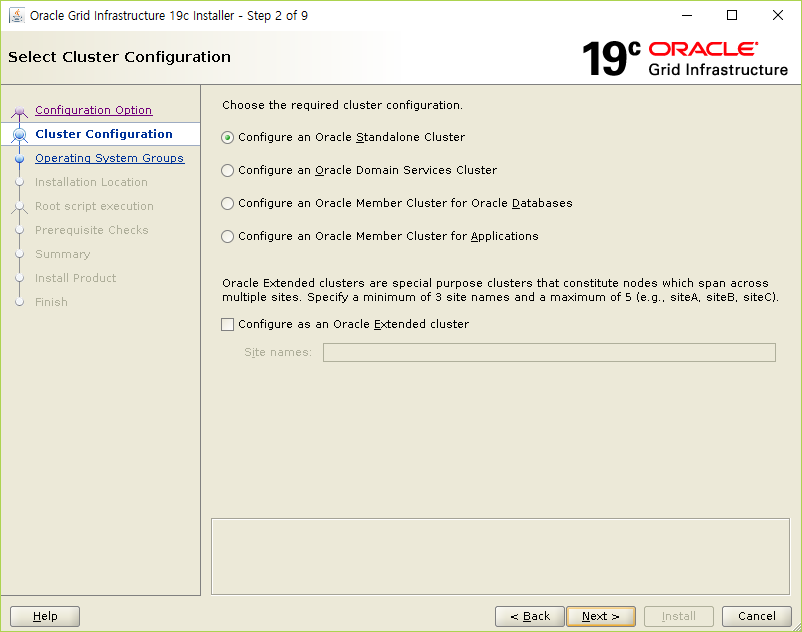

2) Step 2 of 19

Select the Standalone Cluster configuration.

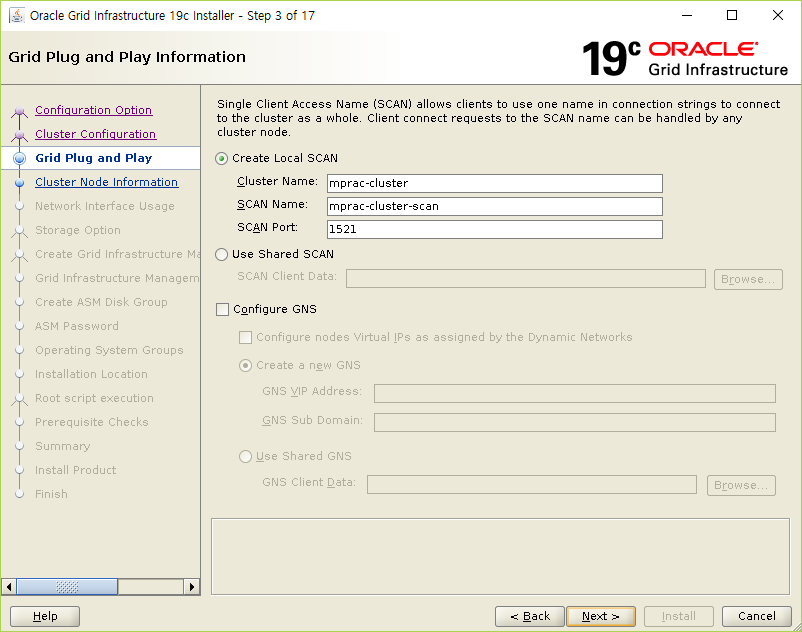

3) Step 3 of 19

Check the Local SCAN information.

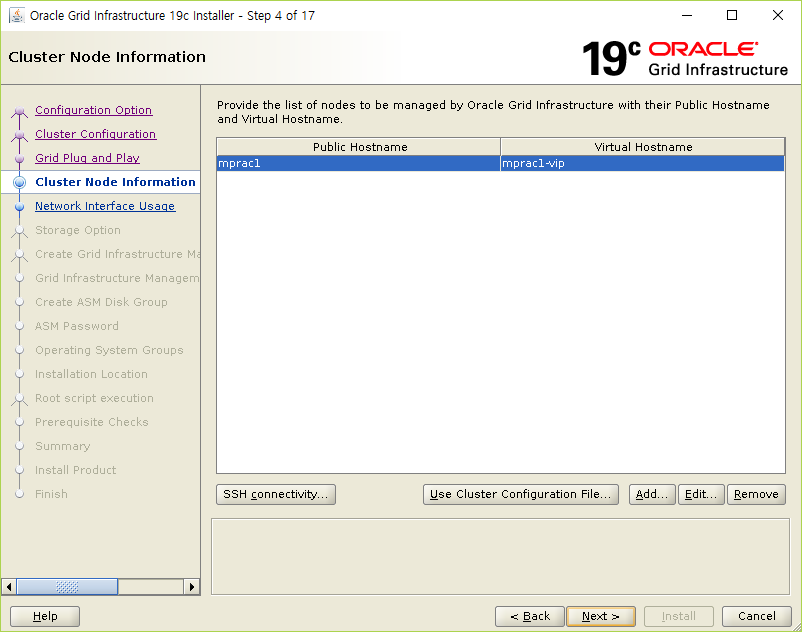

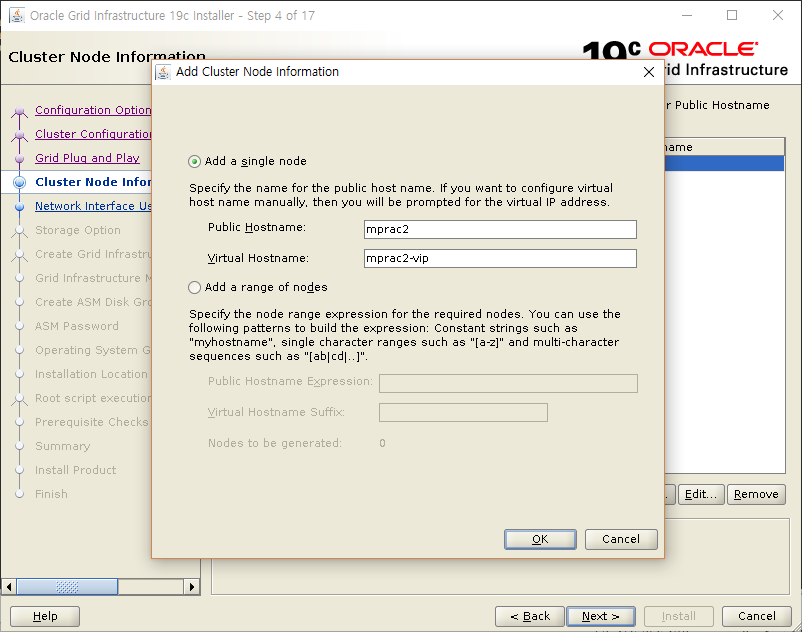

4) Step 4 of 19

Check the node list. (Node 2 is not listed.)

Use the Add button to add node 2 as shown below.

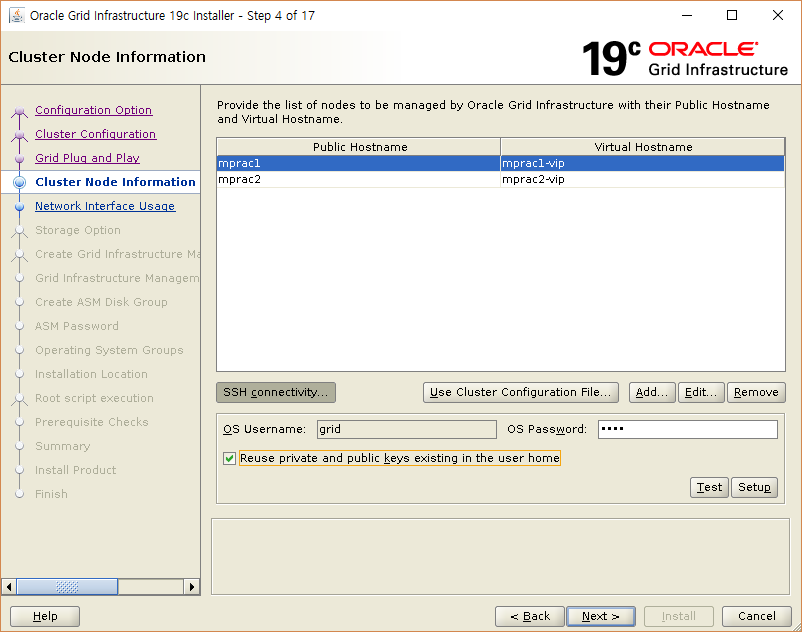

Confirm that node 2 has been added.

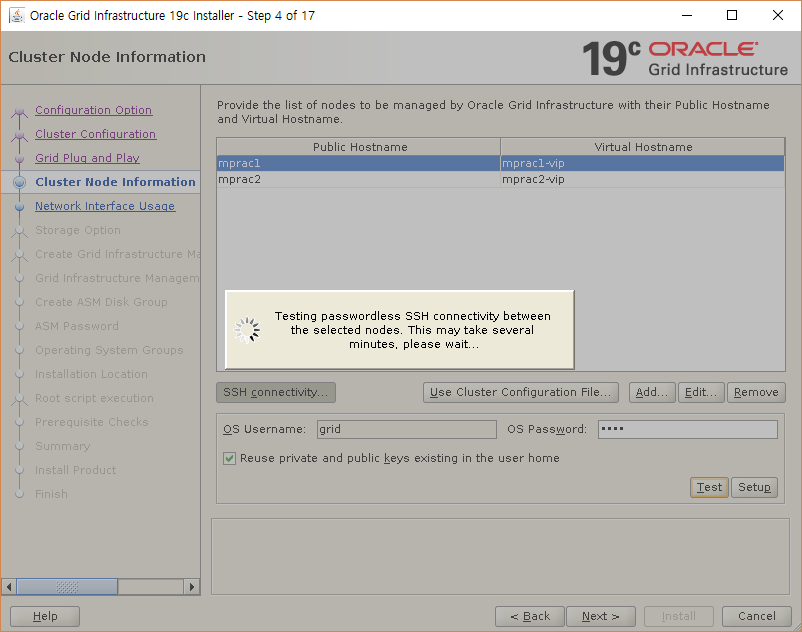

Clicking Next tests connectivity between the nodes.

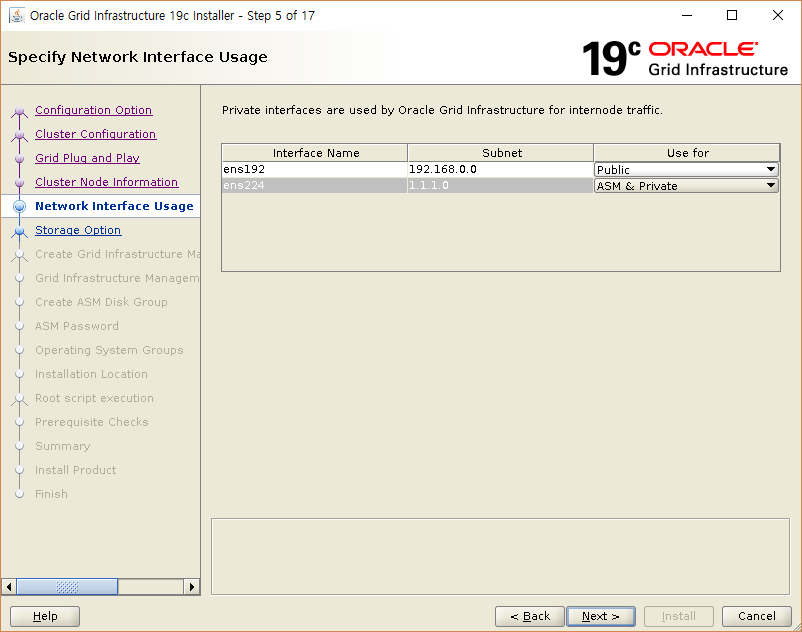

5) Step 5 of 19

Review the networks and assign a usage to each interface.

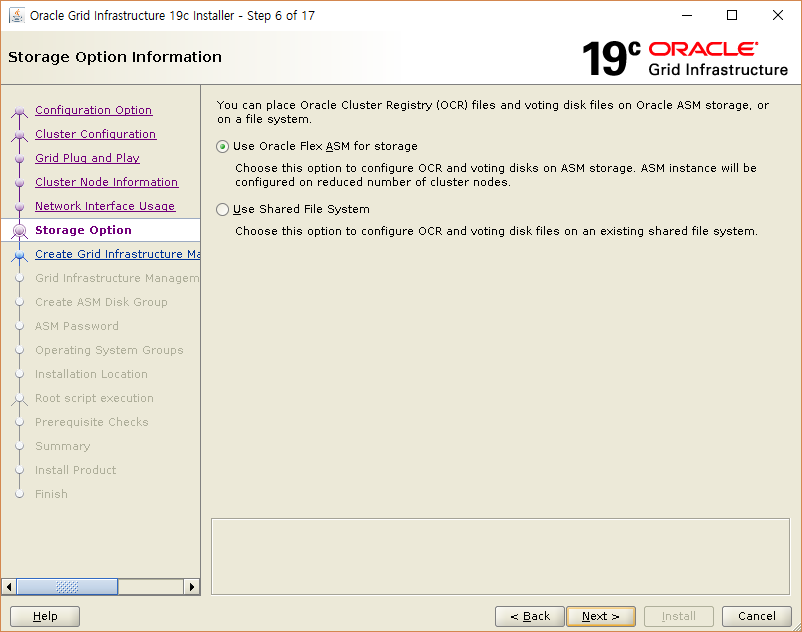

6) Step 6 of 19

Select the storage configuration to use. Because ASM will be used, select Oracle Flex ASM for storage.

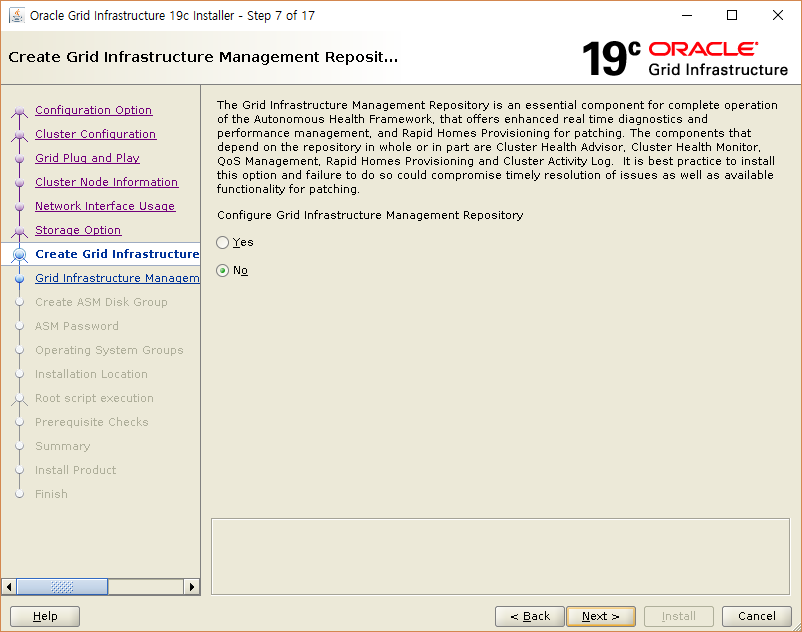

7) Step 7 of 19

The GIMR (Grid Infrastructure Management Repository) will not be used, so select No.

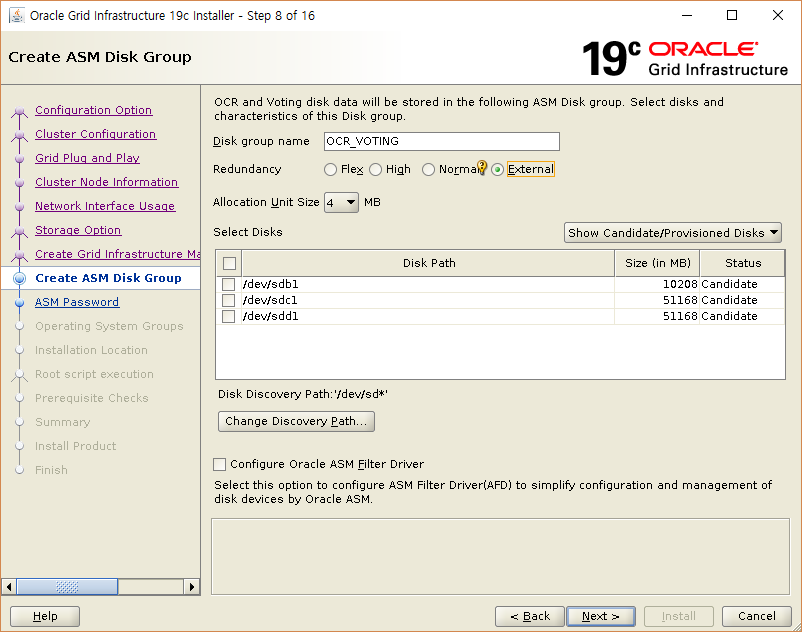

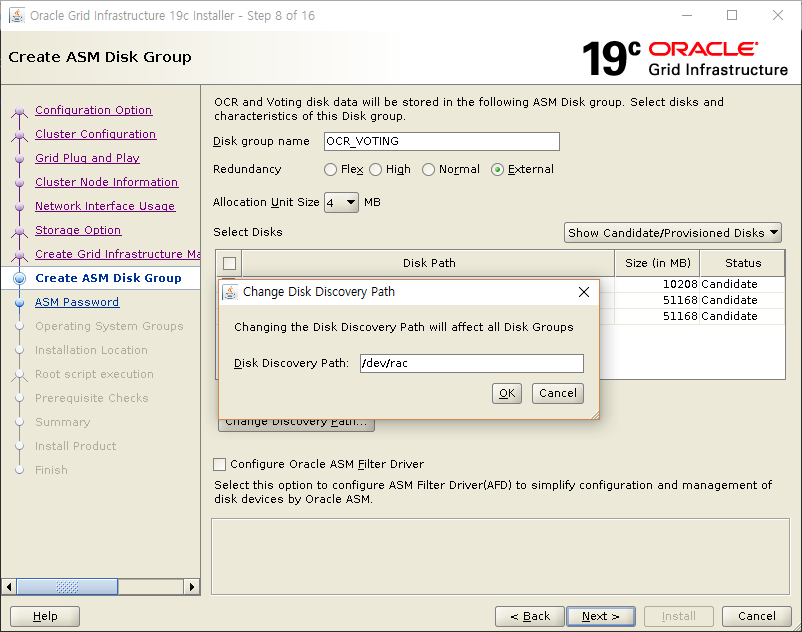

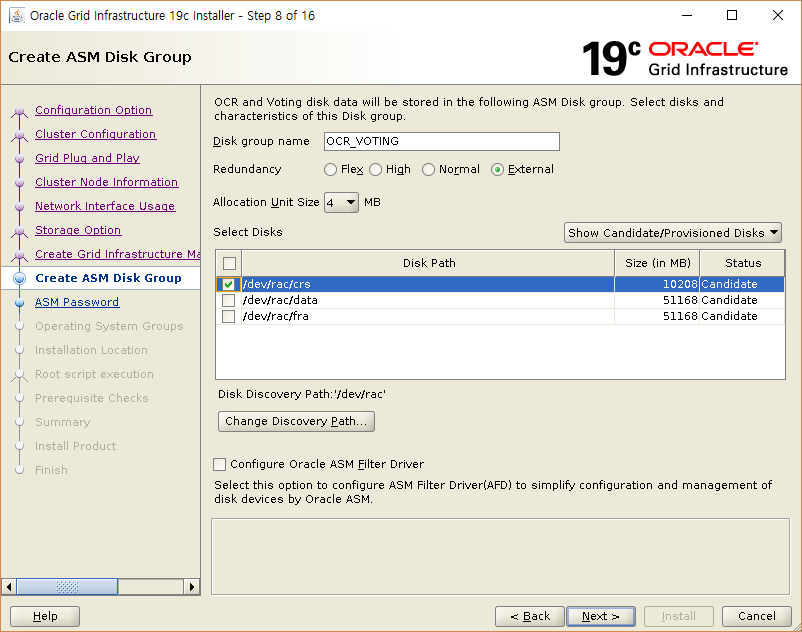

8) Step 8 of 19

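This screen creates the Disk Group that will store the OCR and voting disks.

Click the Change Discovery Path button and change the path to /dev/rac, which was set up through udev in the earlier configuration, so that the devices can be discovered.

Select the /dev/rac/crs device to create the OCR_VOTING disk group.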
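If the installer shows no candidate disks after the discovery path is changed, a quick look at what udev actually created under /dev/rac can narrow the problem down. This is a minimal sketch; `list_asm_candidates` is a hypothetical helper, and following symlinks with `stat -L` assumes the udev rules may have created the entries as links to the underlying block devices.

```shell
#!/usr/bin/env bash
# list_asm_candidates [DIR]
# Hypothetical helper: print "path owner:group mode" for every entry in
# DIR (default /dev/rac). Prints nothing if DIR is empty or missing.
list_asm_candidates() {
    local dir=${1:-/dev/rac} f
    for f in "$dir"/*; do
        # An unmatched glob stays literal, so skip anything that does not exist.
        [ -e "$f" ] || continue
        # -L dereferences symlinks so the real device's owner and mode are shown.
        stat -L -c '%n %U:%G %a' "$f"
    done
}
```

Run as `list_asm_candidates` on each node; the device selected in this step, /dev/rac/crs, should appear in the output on both nodes with an owner and mode that let the grid user read and write it.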

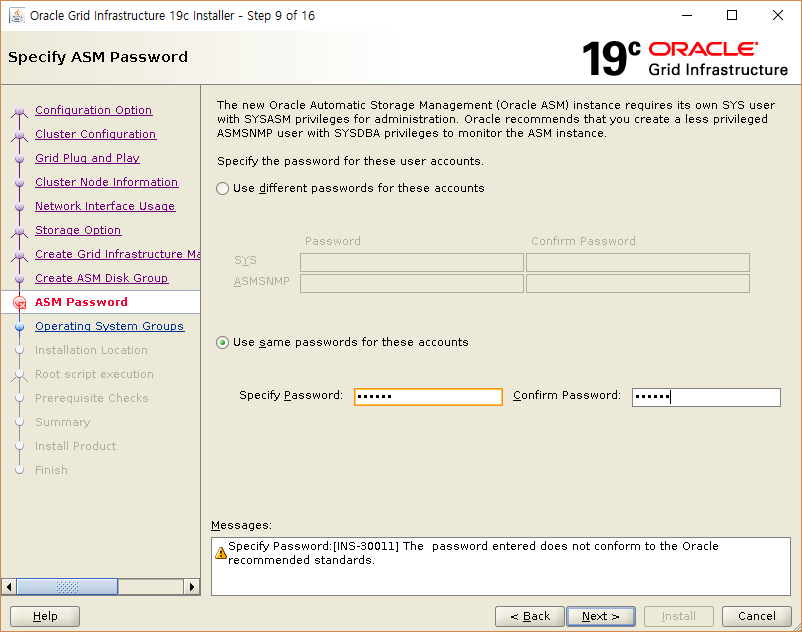

9) Step 9 of 19

Specify the password to use for the ASM administrative accounts.

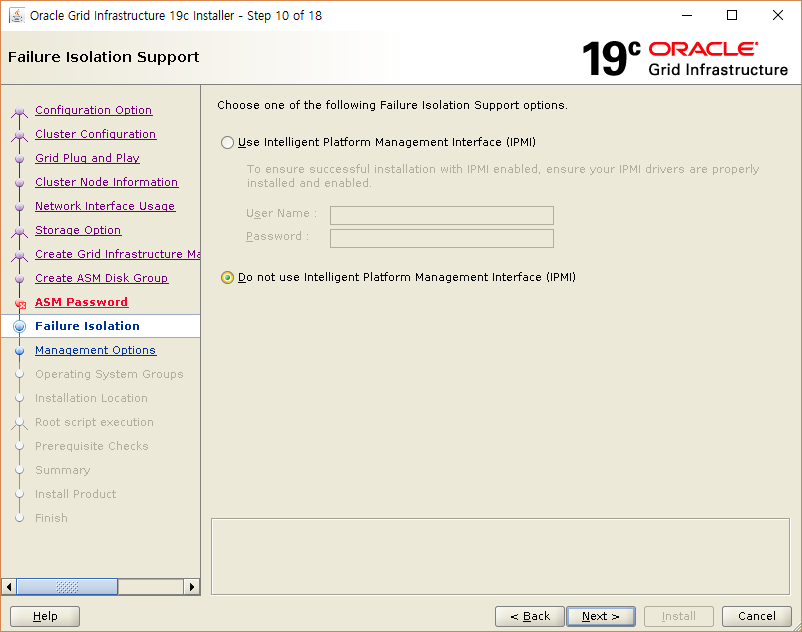

10) Step 10 of 19

IPMI is not used.

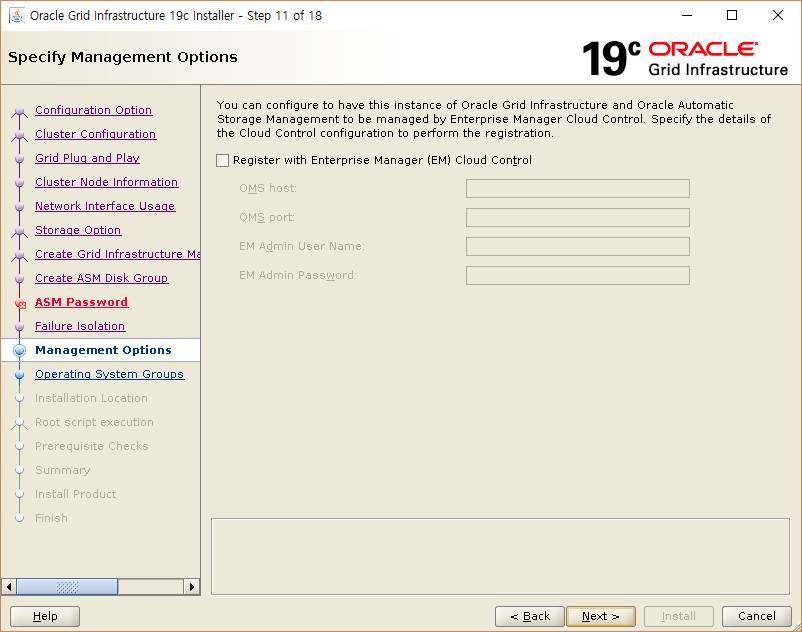

11) Step 11 of 19

EM has not been set up yet, so leave this blank and continue.

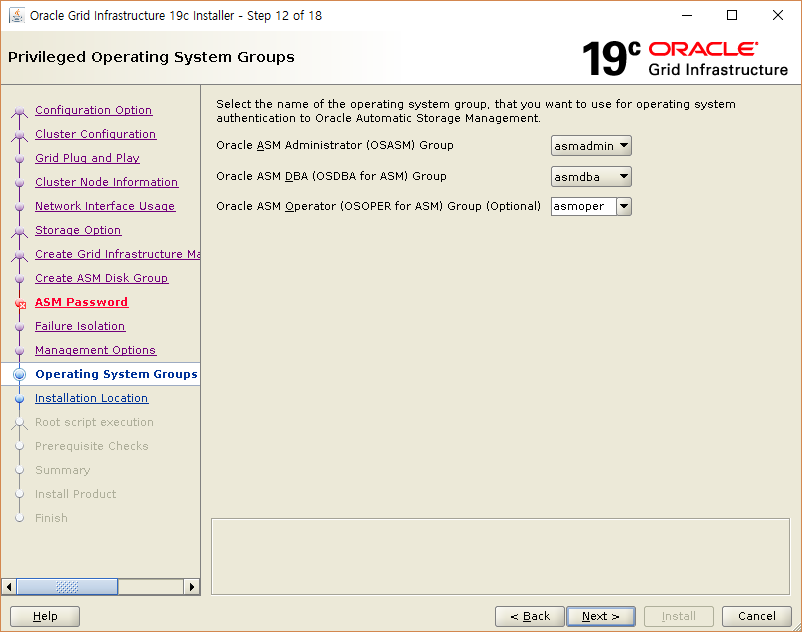

12) Step 12 of 19

Check the OS group assignments for ASM administration.

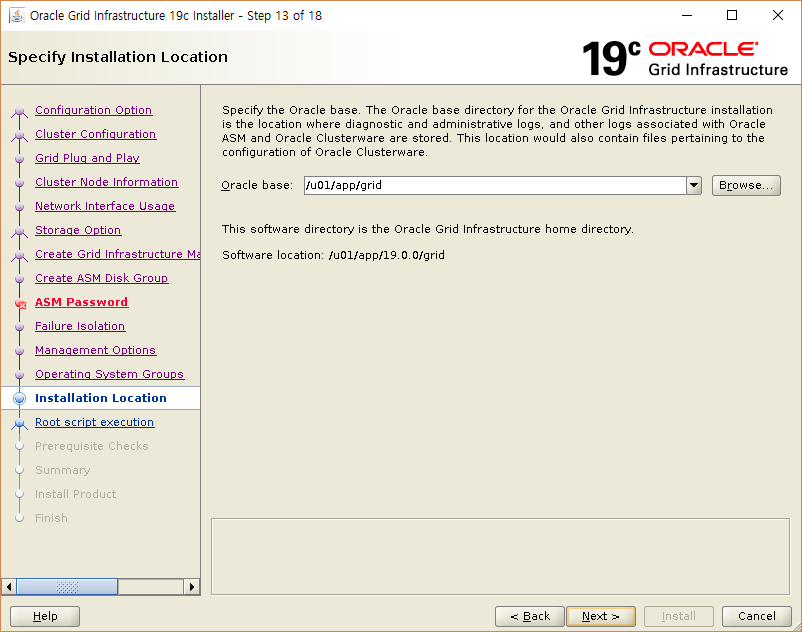

13) Step 13 of 19

Check the Oracle Base location.

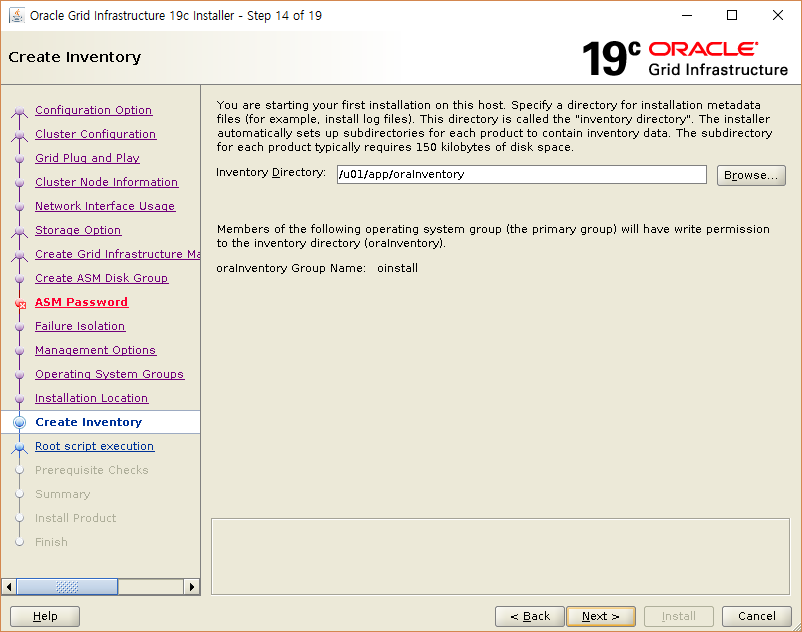

14) Step 14 of 19

Check the Inventory Directory.

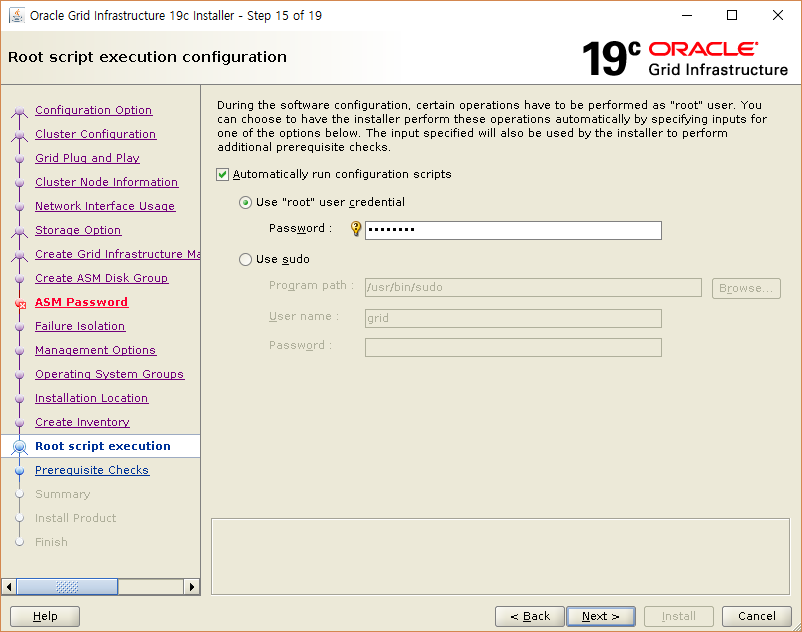

15) Step 15 of 19

Decide how the scripts that must be run as root will be executed and, if needed, enter the root password.

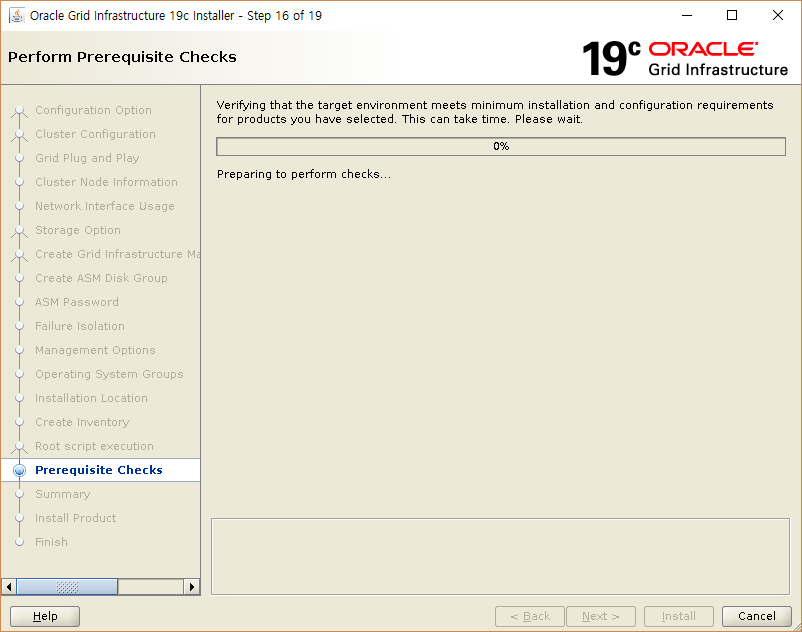

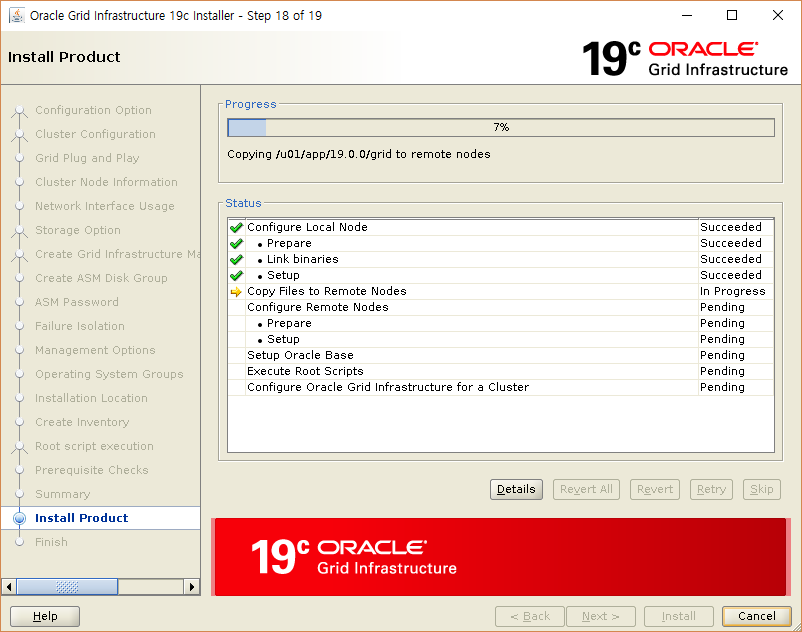

16) Step 16 of 19

The installer performs the same checks as runcluvfy.

The screenshot shows the checks at 30% progress.

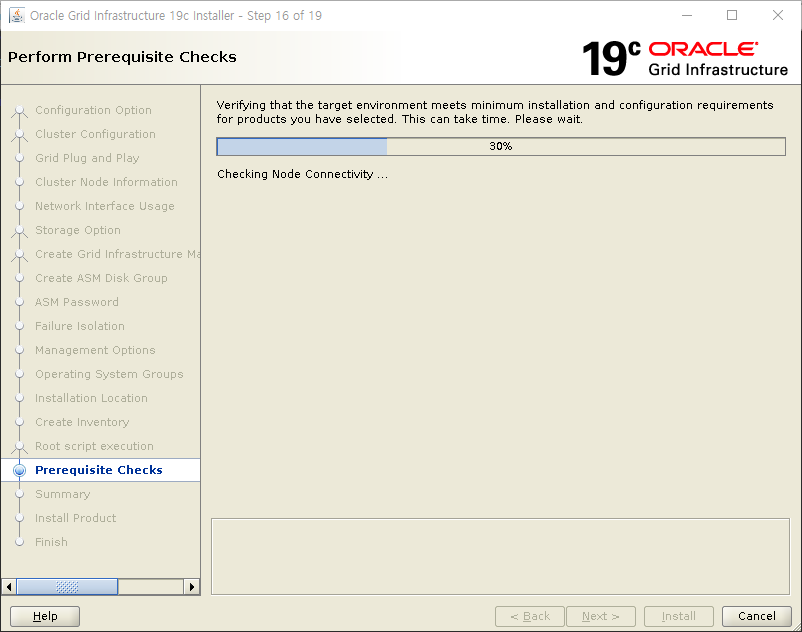

17) Step 17 of 19

Review the settings made so far.

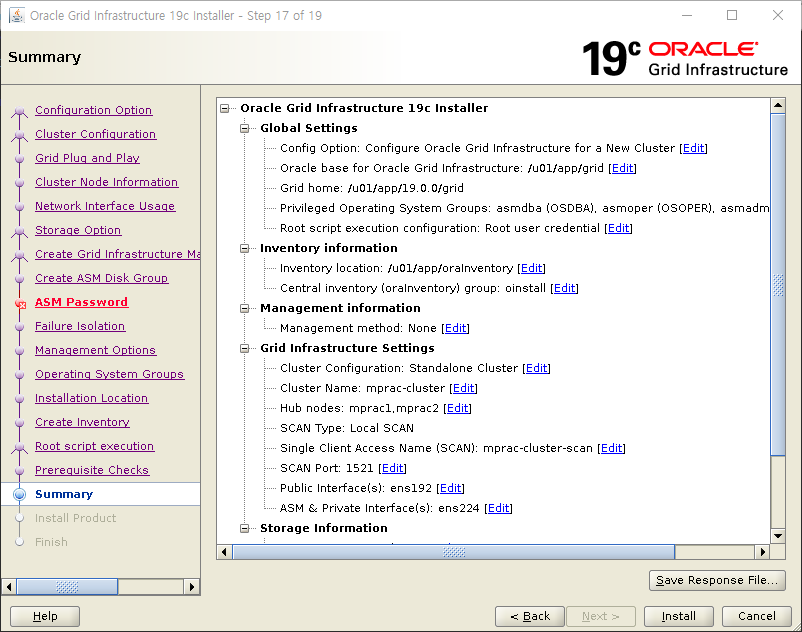

18) Step 18 of 19

The actual installation is performed.

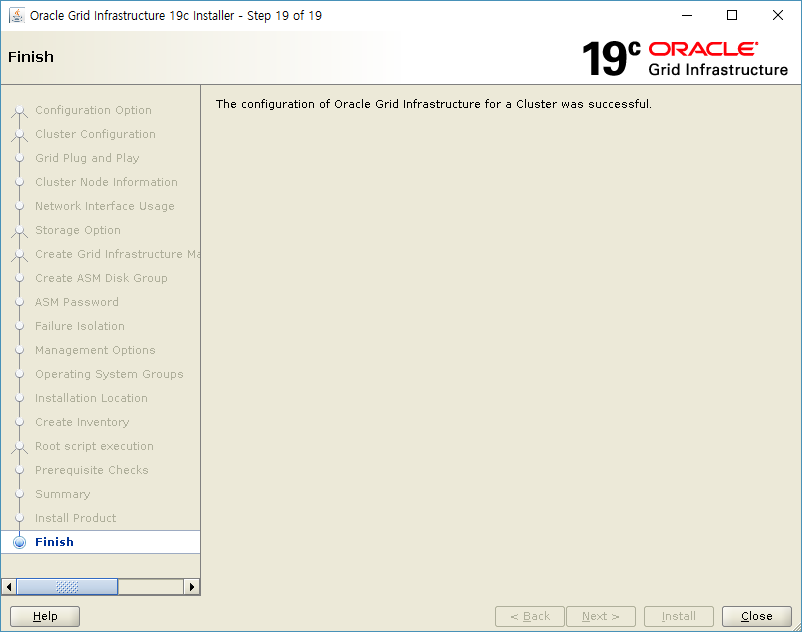

19) Step 19 of 19

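The installation completed successfully.

If NTP is in use, step 16, which performs the same checks as runcluvfy, reports a warning.

An error may also be displayed while the root scripts are being run before step 18. Ignoring it and continuing does not cause any problem with the installation.

These errors or warnings come from the time synchronization check for NTP or chrony, and they can be ignored.

For this installation, to avoid the errors and warnings above, both NTP and chrony were disabled so that Oracle CTSS would be used, and the installation completed cleanly without any errors.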
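Whether CTSS actually took over time synchronization can be checked after the installation with `crsctl check ctss`. CTSS runs in observer mode when it detects an NTP or chrony configuration and in active mode otherwise. The sketch below classifies that output; `ctss_mode` is a hypothetical helper, and matching on the phrases "Active mode" and "Observer mode" is an assumption about the message wording.

```shell
#!/usr/bin/env bash
# ctss_mode < output_of_crsctl_check_ctss
# Hypothetical helper: print "active", "observer", or "unknown" depending
# on which phrase appears in the input (assumed message wording).
ctss_mode() {
    awk '
        /Active mode/   { mode = "active" }
        /Observer mode/ { mode = "observer" }
        END { print (mode == "" ? "unknown" : mode) }
    '
}
```

As the grid user this would be run as `crsctl check ctss | ctss_mode`. With NTP and chrony disabled as described above, the expected answer is `active`.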

6. Check the Grid Infrastructure status

mprac1.localdomain@grid:+ASM1:/home/grid> crsctl status res -t
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.LISTENER.lsnr
               ONLINE  ONLINE       mprac1                   STABLE
               ONLINE  ONLINE       mprac2                   STABLE
ora.chad
               ONLINE  ONLINE       mprac1                   STABLE
               ONLINE  ONLINE       mprac2                   STABLE
ora.net1.network
               ONLINE  ONLINE       mprac1                   STABLE
               ONLINE  ONLINE       mprac2                   STABLE
ora.ons
               ONLINE  ONLINE       mprac1                   STABLE
               ONLINE  ONLINE       mprac2                   STABLE
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)
      1        ONLINE  ONLINE       mprac1                   STABLE
      2        ONLINE  ONLINE       mprac2                   STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       mprac2                   STABLE
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.OCR_VOTING.dg(ora.asmgroup)
      1        ONLINE  ONLINE       mprac1                   STABLE
      2        ONLINE  ONLINE       mprac2                   STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.asm(ora.asmgroup)
      1        ONLINE  ONLINE       mprac1                   Started,STABLE
      2        ONLINE  ONLINE       mprac2                   Started,STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.asmnet1.asmnetwork(ora.asmgroup)
      1        ONLINE  ONLINE       mprac1                   STABLE
      2        ONLINE  ONLINE       mprac2                   STABLE
      3        OFFLINE OFFLINE                               STABLE
ora.cvu
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.mprac1.vip
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.mprac2.vip
      1        ONLINE  ONLINE       mprac2                   STABLE
ora.qosmserver
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.scan1.vip
      1        ONLINE  ONLINE       mprac2                   STABLE
ora.scan2.vip
      1        ONLINE  ONLINE       mprac1                   STABLE
ora.scan3.vip
      1        ONLINE  ONLINE       mprac1                   STABLE
--------------------------------------------------------------------------------
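In the listing above, the third instance of each `ora.asmgroup` resource is OFFLINE with a Target of OFFLINE, which is expected on a two-node cluster. What matters is any row whose Target is ONLINE but whose State is not. The sketch below filters for exactly that; `find_not_online` is a hypothetical helper that assumes the column layout shown above (optional instance number, then Target, then State).

```shell
#!/usr/bin/env bash
# find_not_online < output_of_crsctl_status_res_-t
# Hypothetical helper: print "resource: target=... state=..." for every
# row whose Target is ONLINE but whose State differs.
# Exit status is 1 if any such row was found, 0 otherwise.
find_not_online() {
    awk '
        $1 ~ /^ora\./ { res = $1; next }   # remember the current resource name
        {
            # Cluster resource rows start with an instance number;
            # local resource rows start directly with Target.
            if ($1 ~ /^[0-9]+$/) { target = $2; state = $3 }
            else                 { target = $1; state = $2 }
            if (target == "ONLINE" && state != "ONLINE") {
                print res ": target=" target " state=" state
                bad++
            }
        }
        END { exit (bad > 0) }
    '
}
```

Used as `crsctl status res -t | find_not_online`, it prints nothing and returns 0 for the output shown above.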

Check the CRS and cluster status

mprac1.localdomain@grid:+ASM1:/home/grid> crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
mprac1.localdomain@grid:+ASM1:/home/grid> crsctl check cluster -all
**************************************************************
mprac1:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
mprac2:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
*********************************************